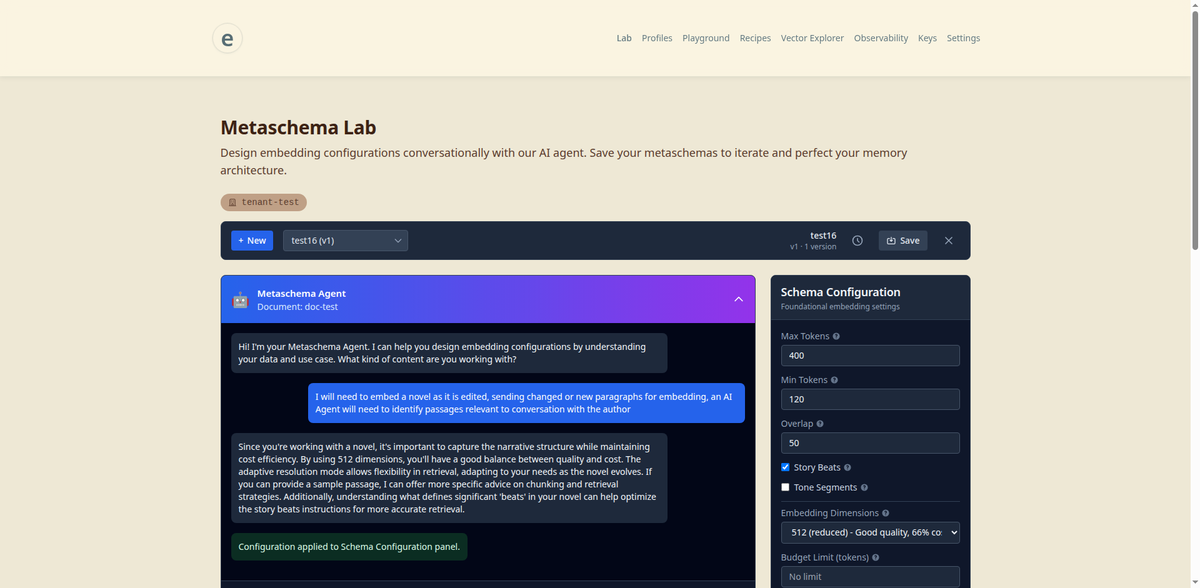

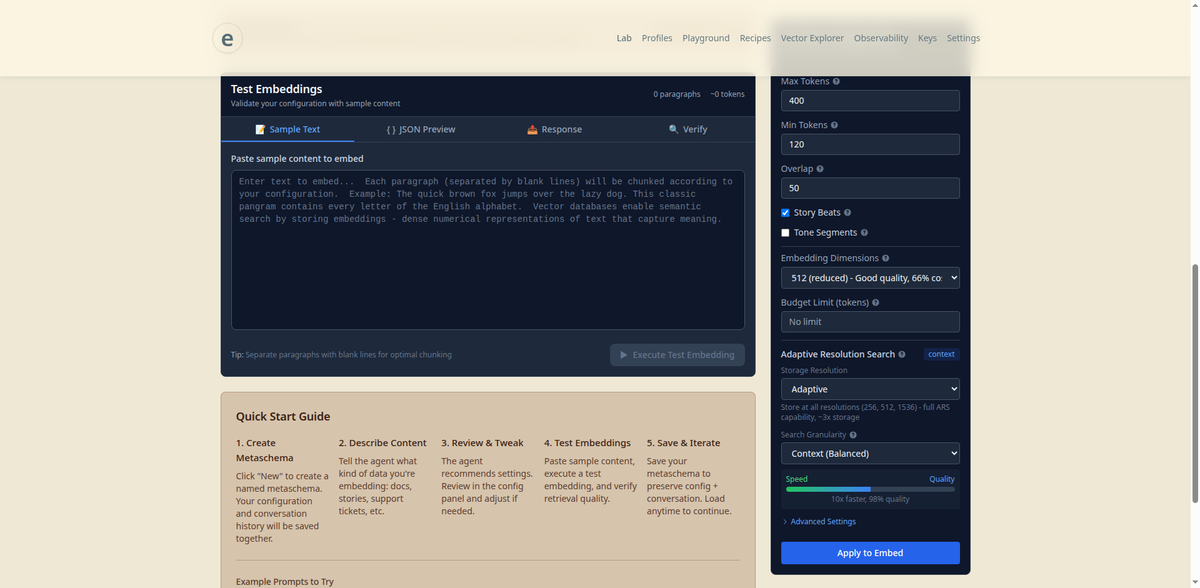

Power users: Full control when you need it

The conversational interface is a starting point. At any time, you can drop into the raw schema and tune every knob:

```json
{
  "layers": {
    "baseline": {
      "chunker": "fixed_token",
      "chunk_size": 800,
      "overlap": 160,
      "preserve_sentence_boundaries": true
    },
    "structural": {
      "metadata_fields": ["speaker", "timestamp", "turn_id", "utterance_type"],
      "classification": {
        "utterance_type": {
          "model": "classifier_v1",
          "classes": ["assertion", "question", "speculation"]
        }
      }
    },
    "story_beat": {
      "model": "narrative_v1",
      "boundary_detection": "auto",
      "min_beat_tokens": 200,
      "preserve_arc_context": true
    }
  },
  "retrieval": {
    "strategy": "hybrid",
    "dense_weight": 0.7,
    "bm25_weight": 0.3,
    "rerank": { "model": "cross_encoder_v2", "top_n": 12 },
    "filters": {
      "utterance_type": ["assertion"],
      "min_confidence": 0.7
    },
    "recency_boost": { "decay_factor": 0.85 }
  }
}
```
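To make the retrieval knobs concrete, here is a minimal Python sketch of how they might interact. It is not the Metaschema Agent's implementation: the helper names (`check_config`, `passes_filters`, `hybrid_score`), the chunk fields `utterance_type` and `confidence`, the sanity checks, and the scoring formula (a weighted sum of normalized dense and BM25 scores, multiplied by `decay_factor` once per unit of age) are all assumptions made for illustration.

```python
import json
import math

# Trimmed copy of the schema above; only the fields this sketch reads.
CONFIG = json.loads("""
{
  "layers": {"baseline": {"chunk_size": 800, "overlap": 160}},
  "retrieval": {
    "dense_weight": 0.7,
    "bm25_weight": 0.3,
    "filters": {"utterance_type": ["assertion"], "min_confidence": 0.7},
    "recency_boost": {"decay_factor": 0.85}
  }
}
""")


def check_config(cfg):
    """Return a list of problems (empty if none). These checks are assumed, not official."""
    problems = []
    baseline = cfg["layers"]["baseline"]
    if not 0 <= baseline["overlap"] < baseline["chunk_size"]:
        problems.append("overlap must be non-negative and smaller than chunk_size")
    retrieval = cfg["retrieval"]
    if not math.isclose(retrieval["dense_weight"] + retrieval["bm25_weight"], 1.0):
        problems.append("dense_weight and bm25_weight should sum to 1")
    return problems


def passes_filters(cfg, chunk):
    """Keep a chunk only if its type is allowed and its confidence is high enough."""
    filters = cfg["retrieval"]["filters"]
    return (chunk["utterance_type"] in filters["utterance_type"]
            and chunk["confidence"] >= filters["min_confidence"])


def hybrid_score(cfg, dense, bm25, age):
    """Hypothetical blend: weighted sum of scores in [0, 1], decayed per unit of age."""
    retrieval = cfg["retrieval"]
    blended = retrieval["dense_weight"] * dense + retrieval["bm25_weight"] * bm25
    return blended * retrieval["recency_boost"]["decay_factor"] ** age
```

Under these assumptions, a chunk classified as `"speculation"` is dropped before scoring, and an older chunk needs a stronger raw match to outrank a recent one. How the real system normalizes scores or measures age may differ.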

Conversational when you want it. Programmatic when you need it. The Metaschema Agent understands both.